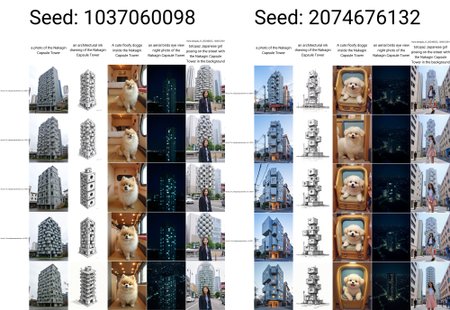

Base F1.d doesn't know this building at all, so this is a training experiment to help me better understand how to train F1D LoRA models on subjects that aren't people or characters.

I'm pretty satisfied with v5. I don't think I will train any more versions unless I discover something revolutionary.

My training notes

v5 - excellent, I am satisfied

v5 works with the on-site generator but isn't as consistent as local generation

gets the general size and proportions right, and has finally picked up on the two metal spires atop the building

interior geometry is consistent, and the room size is acceptable too

I don't think I will train any more versions, but if I do, I would address:

reduce film grain/analogue film effect

pods are slightly fuzzy (I think because some of the training images showed them with demolition netting over them)

interiors always tend to generate hardwood floors

sky tends to be overexposed (I will have to Photoshop the dataset or find new images)

switching to Ostris' ai-toolkit

network rank (and alpha) increased to 32

4000 steps

LR 1e-4 (default for the Flux preset)

explicit trigger word set: nctower
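For anyone wanting to reproduce the v5 settings, here is a rough sketch of them as an ai-toolkit job. ai-toolkit's example configs are YAML; the dict below mirrors the layout of its example Flux LoRA config as I remember it (`network.linear`/`linear_alpha` for rank/alpha, `train.steps`, `train.lr`, `trigger_word`), so treat the key names as assumptions and check them against the example config in your checkout. The run name, dataset path and `batch_size` are hypothetical placeholders; my notes don't record a batch size for v5.

```python
import json

TRIGGER_WORD = "nctower"

# Sketch of a v5-style ai-toolkit job. Key names are assumed from the
# example Flux LoRA config and may differ between ai-toolkit versions.
config = {
    "job": "extension",
    "config": {
        "name": "nctower_v5",  # hypothetical run name
        "process": [
            {
                "type": "sd_trainer",
                "training_folder": "output",
                "device": "cuda:0",
                # Trigger word added to captions that don't already contain it.
                "trigger_word": TRIGGER_WORD,
                # Network rank and alpha both raised to 32 for v5.
                "network": {"type": "lora", "linear": 32, "linear_alpha": 32},
                "datasets": [
                    # Placeholder path; captions are .txt files next to images.
                    {"folder_path": "/path/to/nctower_dataset", "caption_ext": "txt"}
                ],
                # batch_size is an assumption; the notes don't state it.
                "train": {"batch_size": 1, "steps": 4000, "lr": 1e-4},
                "model": {
                    "name_or_path": "black-forest-labs/FLUX.1-dev",
                    "is_flux": True,
                    "quantize": True,
                },
            }
        ],
    },
}

# Dumped as JSON only so this sketch needs no third-party packages;
# convert to YAML to match the shipped example configs.
print(json.dumps(config, indent=2))
```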

v4 - interior better, exterior worse

It's kind of bad at replicating the exterior, but it is getting some of the interior geometries right

network rank seems to max out at 16 with my current SimpleTuner setup; I can't seem to set it any higher

LR reset to default

changed to CosineAnnealingWarmRestarts scheduler, 4 restarts

max steps reverted to 2000
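To picture what the v4 scheduler does: a cosine schedule with warm restarts (SGDR) decays the LR along a half cosine within each cycle, then jumps back to the base LR at the start of the next one. The sketch below assumes "4 restarts" over 2000 steps means four equal 500-step cycles (T_mult = 1); SimpleTuner may count restarts differently. The base LR of 1e-4 is a placeholder, since the notes only say "default". In PyTorch, this corresponds to `torch.optim.lr_scheduler.CosineAnnealingWarmRestarts` with `T_0=500, T_mult=1`.

```python
import math


def cosine_warm_restarts_lr(step: int, base_lr: float, cycle_len: int,
                            eta_min: float = 0.0) -> float:
    """LR at `step` for SGDR with fixed-length cycles (T_mult = 1)."""
    t_cur = step % cycle_len  # position within the current cycle
    return eta_min + (base_lr - eta_min) * (1 + math.cos(math.pi * t_cur / cycle_len)) / 2


MAX_STEPS = 2000
NUM_CYCLES = 4                        # assumption: "4 restarts" = 4 equal cycles
CYCLE_LEN = MAX_STEPS // NUM_CYCLES   # 500 steps per cycle
BASE_LR = 1e-4                        # placeholder for the unrecorded default

for step in (0, 250, 499, 500, 1000, 1999):
    print(step, f"{cosine_warm_restarts_lr(step, BASE_LR, CYCLE_LEN):.2e}")
```

Each cycle starts at the full LR, passes half of it at the midpoint, and approaches `eta_min` just before the next restart.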

v3 - something went horribly wrong

Garbage output, maybe doubling LR wasn't the best idea?

Dataset updated (more variety, in particular more images depicting people, and more detailed captions)

all settings reset to default (same as v1), except:

max steps reduced to 1800

learning rate doubled to 10e4

network rank doubled to 32 (somehow the setting didn't change?)
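A quick note on the notation in the v3 settings: e-notation reads as mantissa × 10^exponent, so `10e4` is 10 × 10^4 = 100,000, while doubling a 1e-4 learning rate gives `2e-4`. The 1e-4 baseline here is only an illustrative value; these notes don't record SimpleTuner's default.

```python
# e-notation: "AeB" means A × 10**B
baseline_lr = 1e-4         # illustrative baseline, not the recorded default
doubled = 2 * baseline_lr  # what doubling that baseline gives
as_written = 10e4          # the value as written in the v3 notes

print(doubled)     # 0.0002
print(as_written)  # 100000.0
```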

v2 - LoRA is okay

I increased the batch size 4x, and as a result it was trained for much longer

(2000 steps × batch size 4, but I cut it off at 1500 steps because it was taking way too long on my rented, hourly-rate GPU)

it gets the exterior appearance consistent, and it is starting to understand the qualities of the interior rooms.

it is not overtrained or "burned"; it can render other types of towers just fine in the same prompt

I think it needs a higher network rank to fully understand both interior and exterior qualities.
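Why rank matters here: LoRA leaves each adapted weight W frozen and learns two small matrices, A (rank × d_in) and B (d_out × rank), applying W + (alpha / rank) · B·A. Doubling the rank doubles the trainable parameters per layer, which is the extra capacity I was hoping would cover both interior and exterior. Dividing by rank is the common convention (for example in Hugging Face PEFT); I'm assuming both trainers I used follow it. The 3072 × 3072 layer size below is purely illustrative.

```python
def lora_param_count(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters LoRA adds to one d_out × d_in linear layer."""
    # Down-projection A is rank × d_in; up-projection B is d_out × rank.
    return rank * d_in + d_out * rank


def lora_scale(alpha: float, rank: int) -> float:
    """Multiplier applied to B @ A before adding it to the frozen weight."""
    return alpha / rank


D = 3072  # illustrative square projection size
for rank, alpha in [(16, 16), (32, 32)]:
    print(f"rank {rank}: {lora_param_count(D, D, rank):,} params/layer, "
          f"scale {lora_scale(alpha, rank)}")
```

Raising alpha together with rank, as in v5, keeps the scale at 1.0, so the extra capacity doesn't also change the effective strength of the update.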

v1 - LoRA is undertrained

using SimpleTuner

default learning rate, 2000 steps × batch size 1

exterior appearance: it understands the individual pods but rarely arranges them in the same type of quasi-random geometry

interior: it understands the narrow room with single round window, but not much else
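Comparing the runs by steps alone hides how much data each one actually saw: each step processes one batch, so samples seen = steps × batch size. That is why v2 took so long even when stopped early: it processed three times as many samples as v1. The v3 entry assumes batch size went back to 1 with the other defaults; v4 and v5 are left out because their batch sizes aren't recorded.

```python
def samples_seen(steps: int, batch_size: int) -> int:
    """Image-caption samples processed: one batch per optimizer step."""
    return steps * batch_size


# (steps, batch size) from the notes; v3's batch size of 1 is an assumption.
runs = {
    "v1": (2000, 1),
    "v2 planned": (2000, 4),
    "v2 actual (stopped at 1500)": (1500, 4),
    "v3": (1800, 1),
}

for name, (steps, bs) in runs.items():
    print(f"{name}: {samples_seen(steps, bs)} samples")
```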