Overview

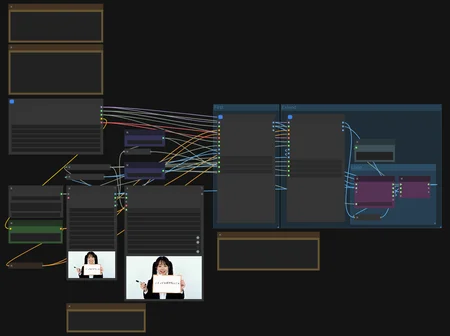

This workflow uses Wan2.1 InfiniteTalk to perform native V2V (video-to-video) lip sync.

Long input videos are handled automatically: the workflow repeats the extension process as many times as needed.

What This Workflow Does

A face mask is generated using the automatic segmentation feature of Florence2Run + SAM2, and the masked face region is then re-rendered with InfiniteTalk.

This keeps motion outside the face faithful to the original video, while maintaining facial consistency and applying accurate lip sync.

Notes

Depending on the original video's frame count, the output length may be rounded down, leaving the result 1–3 frames shorter than the source.

The length is calculated from the latent frame count n using the formula:

(n - 1) * 4 + 1

Only lengths of this form are valid, and the workflow cannot generate more frames than exist in the source video, so the length can never be rounded up.

For example, if the final chunk has 14 frames remaining, the selectable lengths would be 13 or 17.

However, since frames 15–17 do not exist in the source video, they cannot be generated.

As a result, the length is rounded down.
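
The rounding can be sketched in a few lines of Python. This is only an illustration of the arithmetic described above, not code taken from the workflow; the function names `valid_length` and `round_down_to_valid` are hypothetical.

```python
def valid_length(n_latent: int) -> int:
    """Frame count produced by n latent frames: (n - 1) * 4 + 1."""
    return (n_latent - 1) * 4 + 1


def round_down_to_valid(frames_remaining: int) -> int:
    """Largest length of the form 4k + 1 that fits in frames_remaining.

    Hypothetical helper: returns 0 when no frames remain.
    """
    if frames_remaining < 1:
        return 0
    return ((frames_remaining - 1) // 4) * 4 + 1


remaining = 14                       # frames left in the final chunk
usable = round_down_to_valid(remaining)
print(usable, remaining - usable)    # usable length, frames dropped
```

With 14 frames remaining, the usable length is 13 and one frame is dropped. Under this formula, at most 3 frames are ever lost.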

Suggestions for working around this limitation are welcome.

Description

First Release