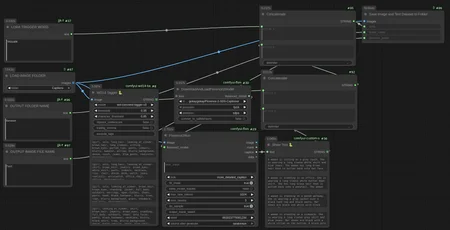

This workflow automatically generates clean, structured image captions by combining WD14 tagging, Florence-style natural language descriptions, and a custom trigger token for training consistency. The goal is easy, one-click captioning of training datasets with proven results: I have trained many high-quality LoRAs on the datasets this workflow outputs.

Designed for:

Dataset preparation

LoRA / model training

Caption inspection & QA

Prompt reconstruction workflows

The examples shown are direct outputs from the workflow, displayed exactly as generated. No LLM or API keys needed. If you’re tired of messy captions or inconsistent datasets, this keeps everything clean, readable, and repeatable in one simple workflow.

What This Workflow Does

Uses WD14 to extract high-quality tag metadata

Uses Florence to generate a natural-language image description

Injects a custom trigger token at the start of every caption

Outputs both tags + descriptive text in a single caption block

Saves captions to a user-defined folder inside ComfyUI/output

This keeps captions:

Consistent

Human-readable

Training-friendly
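For caption inspection, a quick check like the one below can confirm that every saved caption begins with the trigger token. This is a minimal sketch and not part of the workflow itself: it assumes captions are saved as `.txt` files, and the function name, folder path, and trigger value are hypothetical placeholders.

```python
from pathlib import Path


def find_captions_missing_trigger(caption_dir, trigger):
    """Return names of .txt caption files that do not start with the trigger token.

    Assumption: captions are plain-text .txt files in caption_dir.
    """
    missing = []
    for path in sorted(Path(caption_dir).glob("*.txt")):
        text = path.read_text(encoding="utf-8").lstrip()
        # The trigger is expected to be the first comma-separated item.
        first_item = text.split(",", 1)[0].strip()
        if first_item != trigger:
            missing.append(path.name)
    return missing
```

Calling `find_captions_missing_trigger("ComfyUI/output/Captions", "TRIGGER")` with your own folder and token returns an empty list when every caption is anchored correctly.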

📁 Important Setup Note (VERY IMPORTANT)

You must create a folder inside:

ComfyUI/input/

Example:

ComfyUI/input/Captions

Then select that folder in the caption loader node. If the folder does not exist, the workflow does not know which directory to load images from and will not write captions correctly.
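If you prefer to create the folder from a script rather than by hand, the setup step can be sketched as below. This is only an illustration: the helper name `ensure_input_folder` is hypothetical, and the path to your ComfyUI install is an assumption you need to adjust.

```python
from pathlib import Path


def ensure_input_folder(comfy_root, name="Captions"):
    """Create <comfy_root>/input/<name> if it is missing and return its path."""
    input_dir = Path(comfy_root) / "input"
    # Fail loudly on a wrong install path instead of creating stray folders.
    if not input_dir.is_dir():
        raise FileNotFoundError(f"No input folder found at {input_dir}")
    folder = input_dir / name
    folder.mkdir(exist_ok=True)  # no error if the folder already exists
    return folder
```

For example, `ensure_input_folder("ComfyUI")` run from the directory that contains your ComfyUI install creates `ComfyUI/input/Captions`, which you can then fill with images and select in the loader node.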

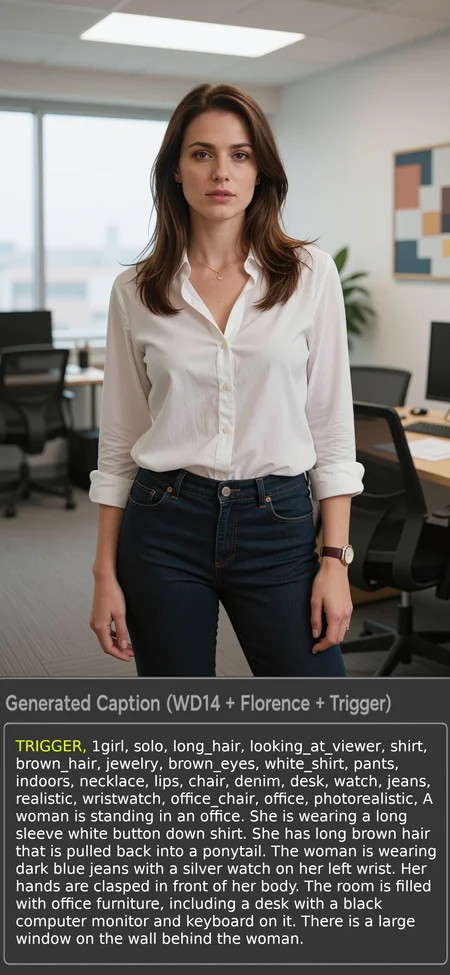

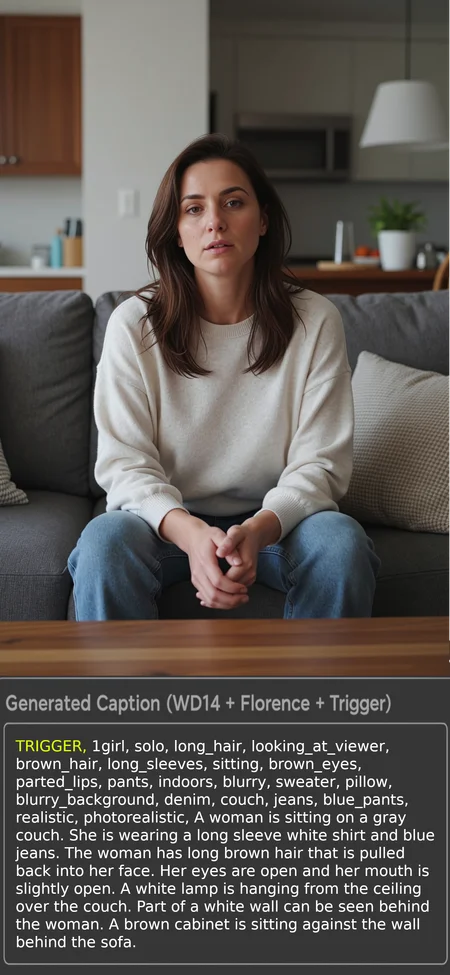

About the Example Images

Each example image shows:

The original image

A caption panel

Nothing is manually edited or rewritten; this is raw workflow output, shown for transparency and accuracy.

Example Output Structure

Captions follow this format:

TRIGGER, wd14_tags_here,

florence_generated_description_here

This structure is ideal for:

LoRA trigger anchoring

Dataset reuse

Easy downstream parsing
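To show why this format is easy to parse downstream, here is a minimal sketch that builds and splits a caption. It assumes the tag line and the description are separated by a line break, as in the format shown above; the exact separator the workflow writes may differ, and both function names are hypothetical.

```python
def build_caption(trigger, tags, description):
    """Join trigger, tags, and description in the TRIGGER, tags,\\ndescription layout."""
    tag_text = ", ".join(tags)
    return f"{trigger}, {tag_text},\n{description}"


def parse_caption(caption):
    """Split a caption into (trigger, tags, description).

    Assumption: the first line holds the trigger and tags, and everything
    after the first line break is the natural-language description.
    """
    head, _, description = caption.partition("\n")
    items = [item.strip() for item in head.split(",") if item.strip()]
    if not items:
        raise ValueError("Caption has no trigger or tags on its first line")
    return items[0], items[1:], description.strip()
```

Because the trigger is always the first item, a training script can verify or swap it without touching the tags or the description.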

Recommended Use Cases

LoRA training datasets

SDXL / Flux / Wan caption generation

Dataset cleanup & validation

Prompt reverse-engineering

Caption benchmarking

Requirements

ComfyUI (recent build)

Must include comfy-core ≥ 0.3.75

Required for BETA Dataset nodes:

LoadImageDataSetFromFolder

SaveImageTextDataSetToFolder

StringConcatenate

Custom Node Packs

comfyui-florence2 (v1.0.6)

comfyui-wd14-tagger (v1.0.0)

comfyui-custom-scripts (pysssss) (v1.2.5)

ComfyUI-Jjk-Nodes

Models

Florence-2-SD3-Captioner

Repo:

gokaygokay/Florence-2-SD3-Captioner

WD14 Tagger Model

wd-convnext-tagger-v3

Folder Setup

Create a folder in

ComfyUI/input/

Example used in workflow:

ComfyUI/input/Captions

Description

Base version