ComfyUI for Linux (Install Script) / Windows (Portable Version)

Linux

First, open the script in a text editor and adjust the paths and versions. The current defaults are:

PYTHON_VERSION="3.12.10" # Python version to install via pyenv

VENV_PATH="/mnt/daten/AI/comfy_env" # Virtual environment location

PYTORCH_VERSION="2.9" # PyTorch major.minor version for wheel URLs (e.g., 2.9, 2.10)

PYTORCH_FULL_VERSION="2.9.1+cu128" # Full PyTorch version for pip install (e.g., 2.9.1+cu128, 2.10.0+cpu)

PYTORCH_INDEX_URL="https://download.pytorch.org/whl/cu128" # PyTorch index URL (cu128, cu121, cpu)

NUMPY_VERSION="2.2.6" # NumPy version (2.2.x compatible with PyTorch 2.9+)

TRANSFORMERS_VERSION="4.57.3" # Transformers version (5.x for Mistral3/Ministral3 support, breaks florence2)

COMFYUI_FRONTEND_VERSION="1.36.13" # ComfyUI frontend version (installed in venv's site-packages)

# ComfyUI installation configuration

COMFYUI_PARENT_DIR="/mnt/daten/AI" # Parent directory where ComfyUI will be cloned

COMFYUI_DIR_NAME="ComfyUI" # ComfyUI folder name (change if you want a different name or multiple versions)

COMFYUI_VERSION="v0.8.2" # ComfyUI version to checkout (tag, branch, or commit SHA). Leave empty for latest.

CREATE_SYMLINKS=true # Set to false to skip symlink creation

USER_MODELS_PATH="/mnt/daten/AI/models" # Your centralized models directory

USER_OUTPUT_PATH="/mnt/daten/AI/output" # Your centralized output directory

USER_CUSTOM_NODES_PATH="/mnt/daten/AI/custom_nodes" # Your centralized custom_nodes directory (shared across ComfyUI installations)

INSTALL_NUNCHAKU=true # Set to false to skip Nunchaku (NVIDIA GPU required)
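With CREATE_SYMLINKS=true, the idea is that the models, output and custom_nodes folders inside the ComfyUI checkout point at the shared USER_* directories. A minimal sketch of how such a link could be created follows. link_shared_dir is a hypothetical helper, not a function taken from the script, and renaming an existing folder to .bak is an assumption about what a safe default would be.

```shell
# Hypothetical helper (not from install_comfy_env.sh): replace a folder inside
# the ComfyUI checkout with a symlink to a shared directory.
link_shared_dir() {
    local target="$1"   # shared directory, e.g. $USER_MODELS_PATH
    local link="$2"     # path inside the checkout, e.g. $COMFYUI_DIR/models

    mkdir -p "$target"

    # Assumption: a real, pre-existing folder is kept as a backup, not deleted.
    if [ -d "$link" ] && [ ! -L "$link" ]; then
        mv "$link" "$link.bak"
    fi

    # -n replaces an existing symlink instead of creating a link inside its target.
    ln -sfn "$target" "$link"
}

# Usage with the values from the configuration above:
# COMFYUI_DIR="$COMFYUI_PARENT_DIR/$COMFYUI_DIR_NAME"
# link_shared_dir "$USER_MODELS_PATH"       "$COMFYUI_DIR/models"
# link_shared_dir "$USER_OUTPUT_PATH"       "$COMFYUI_DIR/output"
# link_shared_dir "$USER_CUSTOM_NODES_PATH" "$COMFYUI_DIR/custom_nodes"
```

Sharing the directories this way is what lets several ComfyUI checkouts (see COMFYUI_DIR_NAME) use the same models and custom nodes.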

To run the script, open a bash terminal in the folder where you extracted the file and type chmod +x install_comfy_env.sh. After that you can run it by right-clicking the script and selecting "execute as app".
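If you prefer to stay in the terminal, the same two steps can be wrapped as below. This is only a sketch: run_installer is a made-up helper name, and the directory argument is wherever you extracted the archive.

```shell
# Hypothetical wrapper: make the installer executable and run it from a terminal.
run_installer() {
    local dir="${1:-.}"                       # folder that contains the script
    local script="$dir/install_comfy_env.sh"

    if [ ! -f "$script" ]; then
        echo "install_comfy_env.sh not found in $dir" >&2
        return 1
    fi

    chmod +x "$script"   # only needed once
    "$script"            # terminal equivalent of "execute as app"
}

# Usage (from the folder that contains the script):
# run_installer .
```

Running it from a terminal has the side benefit that the installer's output stays visible if a step fails.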

If you run all steps (the default), the script will:

install pyenv (if it is not already installed),

install the selected Python version,

create the comfy_env virtual environment (venv), and

install ComfyUI.
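The four steps above can be sketched roughly as follows. This is not the script's actual code: the run helper, the DRY_RUN switch and the function name are illustrative, the pyenv root is assumed to be the default ~/.pyenv, and the repository URL is the upstream ComfyUI one. By default the block only prints the commands it would run.

```shell
# Values copied from the configuration block above.
PYTHON_VERSION="3.12.10"
VENV_PATH="/mnt/daten/AI/comfy_env"
COMFYUI_DIR="/mnt/daten/AI/ComfyUI"
COMFYUI_VERSION="v0.8.2"

# Illustrative switch: print commands unless DRY_RUN=0 is set.
DRY_RUN="${DRY_RUN:-1}"
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

setup_comfy_env() {
    # 1. Install pyenv only when it is missing (official pyenv installer).
    if ! command -v pyenv >/dev/null 2>&1; then
        run sh -c 'curl -fsSL https://pyenv.run | bash'
        export PATH="$HOME/.pyenv/bin:$PATH"   # make the fresh install visible
    fi

    # 2. Install the selected Python version (skipped if already present).
    run pyenv install --skip-existing "$PYTHON_VERSION"

    # 3. Create the venv with that interpreter (assumes the default pyenv root).
    run "$HOME/.pyenv/versions/$PYTHON_VERSION/bin/python" -m venv "$VENV_PATH"

    # 4. Clone ComfyUI, check out the pinned version, install its requirements.
    run git clone https://github.com/comfyanonymous/ComfyUI.git "$COMFYUI_DIR"
    run git -C "$COMFYUI_DIR" checkout "$COMFYUI_VERSION"
    run "$VENV_PATH/bin/pip" install -r "$COMFYUI_DIR/requirements.txt"
}

setup_comfy_env
```

Calling the venv's own pip by path avoids having to activate the environment inside the script.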

It also clones a set of custom nodes that my workflows need (check the script; the block starts at line 551).

In its current state it installs Python 3.12.10, PyTorch 2.9.1 cu128, the nunchaku 1.2.1 package, facexlib, insightface, etc. It also installs the requirements of all cloned custom nodes.

If selected, it also adds code to .bashrc so that you can start ComfyUI faster by typing comfyui in the terminal. In addition, it creates a startup script for launching ComfyUI (right-click start-comfyui.sh and select "execute as app").

The final steps of the script force the versions set at the beginning (e.g. PyTorch 2.9.1, transformers 4.57.3, numpy 2.2.6), because those are most likely overwritten by the requirements of the custom nodes (such as reactor, facenet_pytorch, etc.).
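A re-pinning step of this kind might look like the sketch below. The variable values are the ones from the configuration block; the run helper, the DRY_RUN switch and the function name are illustrative and not taken from the script. By default it only prints the pip commands.

```shell
# Values copied from the configuration block above.
VENV_PATH="/mnt/daten/AI/comfy_env"
PYTORCH_FULL_VERSION="2.9.1+cu128"
PYTORCH_INDEX_URL="https://download.pytorch.org/whl/cu128"
NUMPY_VERSION="2.2.6"
TRANSFORMERS_VERSION="4.57.3"

# Illustrative switch: print commands unless DRY_RUN=0 is set.
DRY_RUN="${DRY_RUN:-1}"
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

force_pinned_versions() {
    # torch is pulled from the PyTorch wheel index so the +cu128 build is found.
    run "$VENV_PATH/bin/pip" install "torch==$PYTORCH_FULL_VERSION" \
        --index-url "$PYTORCH_INDEX_URL"

    # numpy and transformers come from PyPI; an exact "==" pin makes pip
    # replace whatever version a custom node's requirements pulled in.
    run "$VENV_PATH/bin/pip" install \
        "numpy==$NUMPY_VERSION" "transformers==$TRANSFORMERS_VERSION"
}

force_pinned_versions
```

Running this after all custom-node requirements are installed is what makes the pins win; run earlier, they could be overwritten again.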

The script detects the package manager, so it should work on the distros listed below. The package manager is only used for the pyenv/Python setup in Step 1; all other installations are done with pip.

Debian/Ubuntu: apt-get

Fedora/RHEL: dnf (my system)

CentOS/older RHEL: yum

Arch Linux: pacman

openSUSE: zypper
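Detection like this is usually done by probing for the package manager binaries. The following is a sketch of that approach under the assumption that the script works similarly; the function names are made up, and the install commands are the standard non-interactive forms for each tool rather than lines copied from the script.

```shell
# Hypothetical detection: return the first known package manager on PATH.
detect_pkg_manager() {
    local pm
    # dnf is checked before yum because Fedora/RHEL systems often ship both.
    for pm in apt-get dnf yum pacman zypper; do
        if command -v "$pm" >/dev/null 2>&1; then
            echo "$pm"
            return 0
        fi
    done
    echo "unknown"
    return 1
}

# Map a package manager to its non-interactive install command.
install_cmd_for() {
    case "$1" in
        apt-get) echo "sudo apt-get install -y" ;;
        dnf)     echo "sudo dnf install -y" ;;
        yum)     echo "sudo yum install -y" ;;
        pacman)  echo "sudo pacman -S --noconfirm" ;;
        zypper)  echo "sudo zypper --non-interactive install" ;;
        *)       return 1 ;;
    esac
}

pm="$(detect_pkg_manager)" || echo "no supported package manager found" >&2
echo "detected: $pm"
```

The package names needed for building Python with pyenv differ per distro, so they are deliberately left out here.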

In the last update I added step 11, which now creates two aliases:

comfyui (starts comfyui directly)

envact (activates the venv)
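For Bash or Zsh, the two aliases could be defined along these lines. The paths are the defaults from the configuration block; the exact alias bodies written by the script may differ, so treat this as an illustration only.

```shell
# Defaults from the configuration block above.
VENV_PATH="/mnt/daten/AI/comfy_env"
COMFYUI_DIR="/mnt/daten/AI/ComfyUI"

# envact: activate the venv in the current shell.
alias envact="source \"$VENV_PATH/bin/activate\""

# comfyui: start ComfyUI with the venv's interpreter (no activation needed).
alias comfyui="\"$VENV_PATH/bin/python\" \"$COMFYUI_DIR/main.py\""
```

Because the paths are expanded when the alias is defined, the aliases keep working even if VENV_PATH is not set in later shell sessions.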

I've also added multi-shell detection so the aliases are written to the correct file:

Bash → adds aliases to ~/.bashrc

Zsh → adds aliases to ~/.zshrc

Fish → adds functions to ~/.config/fish/config.fish (Fish uses different syntax)

Fallback → uses ~/.profile if no common shells detected
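The mapping above can be expressed as a small case statement. This sketch assumes the shell is identified by its name or path (for example the value of $SHELL); both function names are hypothetical, and append_once is an added safeguard so that re-running the installer does not duplicate lines.

```shell
# Hypothetical mapping from a shell name or path to its startup file.
rc_file_for_shell() {
    case "$(basename "${1:-}")" in
        bash) echo "$HOME/.bashrc" ;;
        zsh)  echo "$HOME/.zshrc" ;;
        fish) echo "$HOME/.config/fish/config.fish" ;;   # needs function syntax, not alias lines
        *)    echo "$HOME/.profile" ;;                   # fallback
    esac
}

# Append a line to a file only if that exact line is not already there.
append_once() {
    local line="$1" file="$2"
    mkdir -p "$(dirname "$file")"
    touch "$file"
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Usage (writes to your real startup file, so it is left commented out):
# append_once "alias envact='source /mnt/daten/AI/comfy_env/bin/activate'" \
#     "$(rc_file_for_shell "$SHELL")"
```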

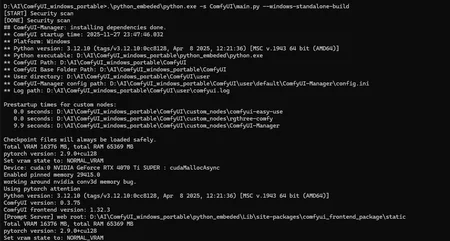

Since I'm not using Windows anymore, there will be no further updates for the portable versions 🤷🏻.

Version 0.8.2

pyTorch 2.9+cu128

python: 3.12.10

Frontend: 1.36.13

removed crystools -> replaced with crystools-monitor only

Even though some API imports have been fixed, the deprecation messages are still displayed.

The reason for adding the forked versions instead of the originals is that some of the nodes currently do not work with the latest ComfyUI version.

Additional Installed Libs

triton 3.5.1

llama_cpp_python 0.3.16

flash attention 2 (v2.8.3+torch2.9+cu128-cp312)

nunchaku-1.0.2+torch2.9-cp312-cp312

sage attention 2.2.0

transformers==4.56.2

insightface, facexlib, facenet_pytorch, comfy-cli, bitsandbytes, tensorflow, hf_xet, requests, pilgram, tf-keras, setuptools_scm, qwen-vl-utils, huggingface-hub, accelerate, Pillow, opencv-python, safetensors, psutil, PyYAML, aiohttp, av, gguf, ultralytics, pytorch-extension

python headers for torch compile/triton

Installed Custom Nodes

comfy-mtb

comfyui_birefnet_ll

comfyui_controlnet_aux

ComfyUI_Eclipse 1.2.1

comfyui_essentials

comfyui_essentials_mb

comfyui_layerstyle

ComfyUI_LayerStyle_Advance

ComfyUI_Patches_ll (forked and fixed Imports)

comfyui_pulid_flux_ll

comfyui_ttp_toolset

comfyui-advanced-controlnet

ComfyUI-Crystools-MonitorOnly (forked and fixed small error in browser log)

ComfyUI-Custom-Scripts (forked and fixed API Imports)

comfyui-detail-daemon

comfyui-easy-use

comfyui-florence2

comfyui-frame-interpolation

ComfyUI-GGUF

ComfyUI-GIMM-VFI

comfyui-impact-pack

comfyui-kjnodes

ComfyUI-Manager (removed the annoying fetch msg output)

ComfyUI-nunchaku (forked and fixed Imports)

ComfyUI-ReActor

comfyui-supir

ComfyUI-TeaCache (forked and fixed Imports)

comfyui-videohelpersuite

ComfyUI-WanVideoWrapper

raffle

RES4LYF (forked and partially fixed (community pull requests))

rgthree-comfy (forked and fixed API Imports)

sd-dynamic-thresholding

was-ns

Version 0.3.75

(You will see some warnings from various nodes because the authors of comfyui are changing the API. -> [DEPRECATION WARNING])

Frontend Version: 1.32.3 (fix)

I've uploaded a patch for the latest frontend version in case you want to upgrade to 1.34.3:

https://civarchive.com/articles/23392/patch-for-the-comfyui-frontend

pyTorch 2.9+cu128

python: 3.12.10

Additional Installed Libs

triton 3.5.1

llama_cpp_python 0.3.16

flash attention 2 (v2.8.3+torch2.9+cu128-cp312)

nunchaku-1.0.2+torch2.9-cp312-cp312

sage attention 2.2.0

transformers==4.56.2

facenet_pytorch, pytorch-extension, facexlib, insightface, comfy-cli, bitsandbytes, tensorflow, hf_xet, requests, pilgram, tf-keras, nvidia-ml-py

python headers for torch compile/triton

Installed Custom Nodes

comfy-mtb

comfyui_birefnet_ll

comfyui_controlnet_aux

ComfyUI_Eclipse (1.0.60)

comfyui_essentials

ComfyUI_essentials_mb

comfyui_layerstyle

ComfyUI_LayerStyle_Advance

comfyui_patches_ll

comfyui_pulid_flux_ll

comfyui_ttp_toolset

comfyui-advanced-controlnet

ComfyUI-Crystools

comfyui-custom-scripts

comfyui-depthanythingv2

comfyui-detail-daemon

comfyui-easy-use

comfyui-florence2

comfyui-frame-interpolation

ComfyUI-GGUF

ComfyUI-GIMM-VFI

comfyui-impact-pack

comfyui-impact-subpack

comfyui-inspire-pack

comfyui-jdcn

comfyui-kjnodes

ComfyUI-Manager

comfyui-multigpu

ComfyUI-nunchaku

comfyui-reactor

comfyui-supir

comfyui-videohelpersuite

ComfyUI-WanMoeKSampler

ComfyUI-WanVideoWrapper

facerestore_cf

raffle

RES4LYF

rgthree-comfy

sd-dynamic-thresholding

sdxl_prompt_styler

teacache

was-ns

whiterabbit

Description

using frontend version 1.32.3 with a fix

downgraded torch from 2.9.1 to 2.9.0

The first start may take a while because I've removed all pycache folders.