🎞️ ComfyUI Image-to-Video Workflow - WAN 2.1 Wrapper (Kiko WAN v3)

This is a high-performance, multi-pass Image-to-Video workflow for ComfyUI, powered by the WAN 2.1 Wrapper, with advanced optimizations like torch.compile and Sage Attention for faster and smarter frame generation. I tried to expose all the settings Kijai exposes that I can understand 😉. This is not the fastest workflow you will find on here, but it is the one I use to make 20-second videos.

Crafted with ❤️ on Arch Linux BTW, using an RTX 4090 and 128 GB of RAM—this setup is tuned for heavy-duty inference and silky-smooth video generation.

🚀 Features

🧠 WAN 2.1 Wrapper for cinematic image-to-video transformations

🔂 Two-pass generation: initial + refinement/extension

🐌 Optional Slow Motion + Frame Interpolation (RIFE, FILM, etc.)

🧽 Sharpening and Upscaling (e.g., RealESRGAN, SwinIR)

🛠️ Includes torch.compile for faster inference

🌀 Integrates Sage Attention for improved attention efficiency

📏 Customizable prompts, seed, duration, and aspect ratio logic

🌀 Final loop polish with "Extend Last Frame"

⚙️ System Specs

OS: Arch Linux (rolling release)

GPU: NVIDIA RTX 4090 (24 GB VRAM)

RAM: 128 GB DDR5

Python: 3.12.9 via pyenv

ComfyUI: Latest build from GitHub

torch: 2.x with torch.compile enabled

Sage Attention: Enabled via patched attention mechanism

🛠️ Workflow Overview

🔹 Input & Resize

Drop an image and optionally resize to fit WAN 2.1's expected input.
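The workflow does not spell out what WAN 2.1's "expected input" is, so the following is only a rough sketch of the resize arithmetic. It assumes a pixel budget of 832x480 and sides snapped down to multiples of 16. Both values and the name `fit_dimensions` are illustrative assumptions, not settings read from the workflow or from the ImageResizeKJ node.

```python
import math


def fit_dimensions(width, height, target_area=832 * 480, multiple=16):
    """Scale (width, height) to roughly target_area pixels.

    Keeps the aspect ratio approximately and snaps each side down to a
    multiple of `multiple`. target_area and multiple are assumed values.
    """
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    # Uniform scale factor that brings the image area to the target area.
    scale = math.sqrt(target_area / (width * height))
    # Snap down to the nearest multiple, but never below one multiple.
    new_w = max(multiple, int(width * scale) // multiple * multiple)
    new_h = max(multiple, int(height * scale) // multiple * multiple)
    return new_w, new_h


print(fit_dimensions(1920, 1080))
```

Snapping down rather than rounding keeps the result within the pixel budget, at the cost of a slightly different aspect ratio.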

🔹 WAN 2.1 Wrapper Core

Uses torch.compile for speed boost

Enhanced with Sage Attention (set via the custom node or environment)

🔹 Pass 1: Generate + Optional Slow Motion

Frame-by-frame synthesis

Add slow motion via interpolation node (RIFE or FILM)
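To make the slow-motion step concrete, here is a small sketch of the frame arithmetic. It assumes the interpolator inserts new frames between each pair of neighbours, so n frames with multiplier m become (n - 1) * m + 1 frames; the exact count may differ by node and settings. The function names are made up for this example.

```python
def interpolated_frame_count(frames, multiplier):
    """Frames after interpolation, assuming (multiplier - 1) new frames
    are inserted between each pair of neighbouring frames."""
    if frames < 1 or multiplier < 1:
        raise ValueError("frames and multiplier must be >= 1")
    return (frames - 1) * multiplier + 1


def duration_seconds(frames, fps):
    """Playback length of a clip at the given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return frames / fps


source_frames = 81   # assumed length of the first pass
source_fps = 16      # assumed frame rate of the generated clip

slow = interpolated_frame_count(source_frames, 2)
# Same fps, more frames: the clip plays for roughly twice as long.
print(slow, duration_seconds(slow, source_fps))
```

Keeping the output frame rate unchanged gives slow motion; multiplying the frame rate by the same factor instead gives a smoother clip of about the original length.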

🔹 Pass 2: Extend + Merge

Extends the motion, ensures smoother transitions

Combines motion with refined prompt guidance

🔹 Final Polish

Sharpening and Upscaling

Final interpolation if needed

Loop-ready output by extending the last frame

🧪 Performance Tips

Tune torch.compile for your system. Every system is different, so my settings might not work for you.

For Sage Attention: use the node.

Running on lower-end GPUs? Disable upscaling and reduce frame count.
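When reducing the frame count, it helps to pick a value the model accepts. The sketch below assumes WAN 2.1 clips run at 16 fps and that frame counts take the form 4n + 1 (for example 81); treat both as assumptions to check against your sampler node. `frames_for_duration` is a name invented for this example.

```python
def frames_for_duration(seconds, fps=16):
    """Nearest frame count of the form 4n + 1 for the requested duration.

    fps=16 and the 4n + 1 constraint are assumptions about WAN 2.1.
    """
    if seconds < 0 or fps <= 0:
        raise ValueError("seconds must be >= 0 and fps must be positive")
    raw = round(seconds * fps)
    # (raw + 1) // 4 rounds (raw - 1) / 4 to the nearest integer, ties upward.
    n = (raw + 1) // 4
    return 4 * n + 1


for secs in (2, 3, 5):
    print(secs, "s ->", frames_for_duration(secs), "frames")
```

Shorter clips lower both sampling time and peak VRAM use, so dropping from 5 to 3 seconds per pass is a reasonable first step on smaller GPUs.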

🧰 Requirements

ComfyUI

WAN 2.1 Wrapper Node

Optional:

RIFE, FILM, or DAIN for interpolation

RealESRGAN / SwinIR for upscaling

Sage Attention patch or node

▶️ How to Use

Load the kiko-wan-v3.json file into ComfyUI.

Drop your image into the input node.

Customize prompts, duration, and frame count.

Click Queue Prompt to generate.

Your video will be rendered in the output folder.
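The steps above use the ComfyUI interface. As an alternative, a workflow can also be queued through ComfyUI's HTTP endpoint `POST /prompt`. This is a minimal sketch, assuming the server runs at the default local address `127.0.0.1:8188` and that the workflow was exported in API format (the regular UI export will not work here). The file name in the usage comment is hypothetical.

```python
import json
import urllib.request
import uuid


def build_queue_request(workflow, server="http://127.0.0.1:8188", client_id=None):
    """Build (but do not send) a POST /prompt request for an API-format workflow."""
    payload = {
        "prompt": workflow,
        "client_id": client_id or uuid.uuid4().hex,
    }
    return urllib.request.Request(
        server.rstrip("/") + "/prompt",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )


def queue_workflow(workflow, server="http://127.0.0.1:8188"):
    """Send the workflow to a running ComfyUI server and return its JSON reply."""
    with urllib.request.urlopen(build_queue_request(workflow, server)) as resp:
        return json.load(resp)


# Usage (requires a running ComfyUI instance):
# with open("kiko-wan-v3-api.json") as f:   # hypothetical API-format export
#     print(queue_workflow(json.load(f)))
```

Splitting request construction from sending makes it easy to inspect the payload before anything reaches the server.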

📁 Files

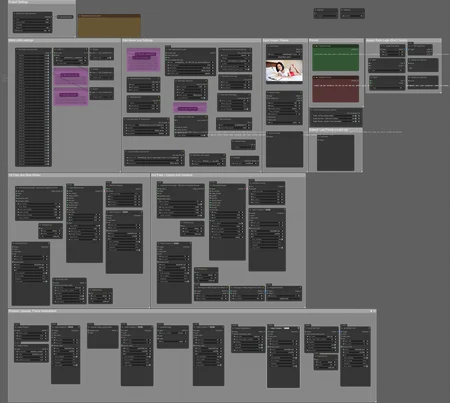

kiko-wan-v3.json: Exported workflow (coming soon)

kiko-wan-v3.png: Workflow diagram

🧠 Inspirations & Credits

Real-ESRGAN, RIFE, FILM, Sage Attention contributors

Arch Linux + NVIDIA ecosystem for elite workstation performance 😉

💡 Future Plans

Add batch image-to-video mode

Audio?

⚙️ Custom Nodes Used in kiko-wan-wrapper-v3.json

Anything Everywhere: https://github.com/chrisgoringe/cg-use-everywhere

Display Any (rgthree): https://github.com/rgthree/rgthree-comfy

Fast Bypasser (rgthree): https://github.com/rgthree/rgthree-comfy

Fast Groups Bypasser (rgthree): https://github.com/rgthree/rgthree-comfy

GetImageRangeFromBatch: https://github.com/kijai/ComfyUI-KJNodes

GetImageSize+: https://github.com/cubiq/ComfyUI_essentials

Image Filter: https://github.com/chrisgoringe/cg-image-filter

ImageBatchMulti: https://github.com/kijai/ComfyUI-KJNodes

ImageFromBatch+: https://github.com/cubiq/ComfyUI_essentials

ImageListToImageBatch: https://github.com/ltdrdata/ComfyUI-Impact-Pack

ImageResizeKJ: https://github.com/kijai/ComfyUI-KJNodes

LoadWanVideoClipTextEncoder: https://github.com/kijai/ComfyUI-WanVideoWrapper/

LoadWanVideoT5TextEncoder: https://github.com/kijai/ComfyUI-WanVideoWrapper/

MarkdownNote: built into ComfyUI (no custom node pack needed)

PlaySound|pysssss: https://github.com/pythongosssss/ComfyUI-Custom-Scripts

ProjectFilePathNode: https://github.com/MushroomFleet/DJZ-Nodes

RIFE VFI: https://github.com/Fannovel16/ComfyUI-Frame-Interpolation

ReActorRestoreFace: https://github.com/Gourieff/ComfyUI-ReActor

Seed Generator: https://github.com/giriss/comfy-image-saver

SimpleMath+: https://github.com/cubiq/ComfyUI_essentials

Text Input [Dream]: https://github.com/alt-key-project/comfyui-dream-project

VHS_VideoCombine: https://github.com/Kosinkadink/ComfyUI-VideoHelperSuite

WanVideoBlockSwap: https://github.com/kijai/ComfyUI-WanVideoWrapper/

WanVideoDecode: https://github.com/kijai/ComfyUI-WanVideoWrapper/

WanVideoEnhanceAVideo: https://github.com/kijai/ComfyUI-WanVideoWrapper/

WanVideoFlowEdit: https://github.com/kijai/ComfyUI-WanVideoWrapper/

WanVideoImageClipEncode: https://github.com/kijai/ComfyUI-WanVideoWrapper/

WanVideoLoopArgs: https://github.com/kijai/ComfyUI-WanVideoWrapper/

WanVideoLoraBlockEdit: https://github.com/kijai/ComfyUI-WanVideoWrapper/

WanVideoLoraSelect: https://github.com/kijai/ComfyUI-WanVideoWrapper/

WanVideoModelLoader: https://github.com/kijai/ComfyUI-WanVideoWrapper/

WanVideoSLG: https://github.com/kijai/ComfyUI-WanVideoWrapper/

WanVideoSampler: https://github.com/kijai/ComfyUI-WanVideoWrapper/

WanVideoTeaCache: https://github.com/kijai/ComfyUI-WanVideoWrapper/

WanVideoTextEncode: https://github.com/kijai/ComfyUI-WanVideoWrapper/

WanVideoTorchCompileSettings: https://github.com/kijai/ComfyUI-WanVideoWrapper/

WanVideoVAELoader: https://github.com/kijai/ComfyUI-WanVideoWrapper/

WanVideoVRAMManagement: https://github.com/kijai/ComfyUI-WanVideoWrapper/
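To check which of the nodes listed above a given workflow file actually uses, the file can be scanned directly. This sketch assumes the regular UI export format, where a top-level `nodes` list holds objects with a `type` field; nested group nodes or subgraphs are not handled. The function name and the file name in the usage comment are illustrative.

```python
import json
from collections import Counter


def node_type_counts(workflow):
    """Count node types in a UI-format ComfyUI workflow dictionary."""
    nodes = workflow.get("nodes", [])
    return Counter(node["type"] for node in nodes if "type" in node)


# Tiny inline example standing in for a real workflow file.
example = {
    "nodes": [
        {"id": 1, "type": "WanVideoModelLoader"},
        {"id": 2, "type": "WanVideoSampler"},
        {"id": 3, "type": "RIFE VFI"},
        {"id": 4, "type": "WanVideoSampler"},
    ]
}

for name, count in sorted(node_type_counts(example).items()):
    print(f"{name}: {count}")

# Usage with a real file (name is hypothetical):
# with open("kiko-wan-v3.json") as f:
#     print(sorted(node_type_counts(json.load(f))))
```

Comparing the printed names against the list above shows which node packs still need to be installed.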
