New 1.1 versions of the Wan Fun models:

https://huggingface.co/alibaba-pai/Wan2.1-Fun-V1.1-1.3B-InP

All my examples use the 1.3B model.

If you are looking for video to video, Wan 2.1 VACE is the way to go:

https://civarchive.com/models/1604221/native-vace-video-to-video-with-ref

V5.1

- tested 14b fp8 and 14b GGUFs

- added LoRA support for 14B and INP

- added new upscale option

- Upscale can now take the last frame from the base to use as the last frame reference.

- added silence padding to audio for lipsync
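Silence padding means extending the audio with zero-valued samples until it covers the full length of the video, so lipsync has audio for every frame instead of stopping early. A minimal sketch of that idea, not the node the workflow actually uses: the function name `pad_with_silence`, the 16 fps frame rate and the 16 kHz mono sample rate are all assumptions for illustration.

```python
import math


def pad_with_silence(samples, sample_rate, num_frames, fps):
    """Hypothetical helper: append silent (0.0) samples so the audio
    lasts at least as long as the video it will be lip-synced to."""
    video_seconds = num_frames / fps
    target_len = math.ceil(video_seconds * sample_rate)
    missing = max(0, target_len - len(samples))
    # Audio that is already long enough comes back unchanged (as a list).
    return list(samples) + [0.0] * missing


if __name__ == "__main__":
    # Assumed values: 81 frames at 16 fps, mono audio at 16 kHz.
    speech = [0.1] * (3 * 16000)  # 3 s of placeholder "speech"
    padded = pad_with_silence(speech, 16000, 81, 16)
    print(len(padded))  # 81000 samples, i.e. 5.0625 s
```

Padding only at the end keeps the speech aligned with the start of the video; the extra silence just gives lipsync something to run on for the remaining frames.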

V5 - hotfix uploaded to fix the INP controls for the base not being connected.

- added original image to video controls as an option

- added model upscale option before upscale render.

- added workflow image save to end.

- added latentsync fill to stop it from cutting off before the end of the video.

- many small fixes

- 3 examples with settings as PNG workflows

V4

- added INP fun controls back as an option.

- added a last frame only option with the INP model

- removed ReActor/face fixing. Add it yourself if you need it.

- removed all impact pack nodes.

Bug to be patched soon

- The switch at the bottom of the last frame loader needs to connect to the resize group's Resize Image v2 to allow resizing (rescale works fine).

I don't know if this was broken for anyone else, but the Impact Pack switch nodes are borked at the moment and v2.1 needed them. If you updated your Impact Pack, it was probably broken like it was for me.

The Impact Pack switches are still broken.

V3 - fixes and upgrades

- removed Impact Pack switches; they are broken with the latest updates

- now using Comfyroll switches

- changed from Fun controls to the default Wan image to video

- can now use the 14b image to video wan models as well as the INP models

- Added model swap for upscale

- fixed KJNodes resizes by switching them to v2

- Probably the best interpolation between 2 images currently

Bug - if you want to do last frame only, you will need to connect the image to the resize; I forgot to add a new switch for that.

V2.1 - Small changes

- Set defaults that should work for most things

- added latentsync option

- Changed end to account for latentsync.

- Changed seed to be the same for both samplers

- notes explain more.

- A few small QoL changes

V2

- added end frame loader allowing frame interpolation

- can use the first frame only, the last frame only, or both

- added notes with models and nodes used

- Finished UI

- added switches for all groups

- fixed all bugs from 1.1, hopefully.

- fixed crops

- fixed bypass

Model

https://huggingface.co/alibaba-pai/Wan2.1-Fun-1.3B-InP/tree/main

You only need the diffusion_pytorch_model.safetensors file.

CLIP vision/VAE/text encoder

https://blog.comfy.org/p/wan21-video-model-native-support

img2vid 1.1 - Low VRAM fixes

- Added option to crop start frame image

- Added Purge VRAM nodes in 6 spots, lowering VRAM needs by a ton.

- I think it uses as little as 6 GB on renders now.

- (49% of my 12 GB for a 512 base at 81 frames)

- (63% of my 12 GB for a 768 base at 81 frames; 68% for the 1.5x upscale)

- changed order of final groups

- added sound to the end.

- added a switch to the upscale that passes the base through to the end groups if the upscale is bypassed.

- changed encode to tiled for upscale
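The VRAM figures above are percentages of a 12 GB card, so converting them back to gigabytes shows where the "as little as 6 GB" estimate comes from (49% of 12 GB is about 5.9 GB). A small sketch of that arithmetic; the helper names are illustrative only, and it assumes the 1.5 upscale figure refers to upscaling the 768 base, which the notes imply but don't state outright.

```python
TOTAL_VRAM_GB = 12.0  # the card size mentioned in the notes

# Reported peak usage as a fraction of the 12 GB card.
reported = {
    "512 base, 81 frames": 0.49,
    "768 base, 81 frames": 0.63,
    "1.5x upscale": 0.68,
}


def used_gb(fraction, total_gb=TOTAL_VRAM_GB):
    """Convert a usage fraction (0.49 == 49%) into gigabytes."""
    return fraction * total_gb


def upscaled_side(base_px, scale):
    """Side length in pixels after upscaling, rounded to whole pixels."""
    return round(base_px * scale)


if __name__ == "__main__":
    for label, fraction in reported.items():
        print(f"{label}: {used_gb(fraction):.1f} GB")
    # Assumption: the 1.5x upscale is applied to the 768 base.
    print(upscaled_side(768, 1.5))  # 1152
```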

Uploaded a hotfix

Fixed 2 bugs, and added rescale after the model upscale at end.

Image to vid with wan fun

https://huggingface.co/alibaba-pai/Wan2.1-Fun-1.3B-InP

Tip: you can connect the ref image to the end frame to animate into the image.

Simple wan 2 image to video

Using inp model

-8 step base

-5 step upscale

-teacache

-Skip layer guidance

-lower memory image to video.

V2

- added teacache

- added shift

- added feta enhance

- added upscaler

- added florence image prompting

- added frame interpolation

- added audio

- Changed a few things with latents and info

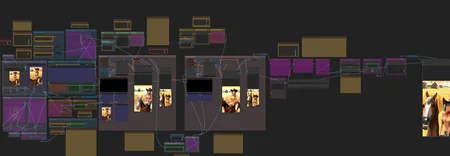

Wan 2.1 workflow

-text to vid

-gguf support

-1.3b model

-14b models

-image to vid option

-nsfw unfiltered

Image to vid GGUF links below

Models

Comfy Update

https://blog.comfy.org/p/wan21-video-model-native-support

I use the VAE, text encoder, and CLIP vision from here:

https://civarchive.com/models/1295569?modelVersionId=1463630

Main

https://huggingface.co/Kijai/WanVideo_comfy/tree/main

These VAEs don't seem to work with this.

I used the models from here

14B GGUF TTV

https://huggingface.co/city96/Wan2.1-T2V-14B-gguf/tree/main

14B GGUF ITV

https://huggingface.co/city96/Wan2.1-I2V-14B-480P-gguf/tree/main
