With Illustrious V1.0 out and all the noise around it, I was REALLY curious whether it could improve on our existing Illustrious checkpoints. Since I am probably not the only one, here is a LoRA to "test/demo" what V1.0 could bring to the table versus V0.1.

This is for testing purposes only and should not go against the license. Since it's a LoRA, it should normally be possible to apply it on top of other models in the on-site generator if you don't want to test it locally.

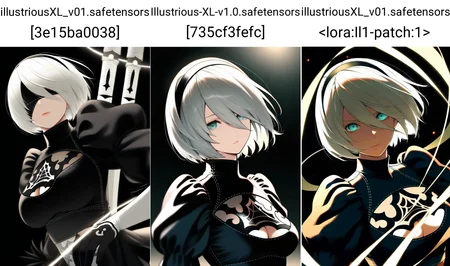

The showcase pictures are basic tests:

V0.1 / V1.0 / V0.1+LoRA

AlterBan V3 / AlterBan V3 + 0.8 x (V1.0-V0.1) / AlterBan V3 + LoRA
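For anyone wondering what "AlterBan V3 + 0.8 x (V1.0-V0.1)" means in practice, here is a minimal sketch of that kind of add-difference merge. It is not the exact tool I used: the function name `add_difference` and the toy dictionaries are made up for illustration, and real checkpoints hold tensors rather than short lists of floats.

```python
def add_difference(base, tuned, original, alpha=0.8):
    """Return base + alpha * (tuned - original), key by key.

    All three arguments are toy "state dicts": name -> list of floats.
    """
    merged = {}
    for key, base_w in base.items():
        if key in tuned and key in original:
            merged[key] = [
                b + alpha * (t - o)
                for b, t, o in zip(base_w, tuned[key], original[key])
            ]
        else:
            # Keys missing from either reference model are copied unchanged.
            merged[key] = list(base_w)
    return merged


# Hypothetical stand-ins for AlterBan V3, Illustrious V1.0 and V0.1.
alterban = {"w": [1.0, 2.0], "extra": [5.0]}
v10 = {"w": [0.5, 1.5]}
v01 = {"w": [0.0, 1.0]}

merged = add_difference(alterban, v10, v01, alpha=0.8)
print(merged)
```

The LoRA column in the showcase does the same thing in spirit, except that the (V1.0-V0.1) difference has been compressed to rank 256 first, which is why the two results are close but not identical.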

NB: it's the VERY heavy version. 256 dimensions. Not for everyday use. It will not give the full effect of Illustrious V1.0, but it should still give a good idea of whether you should bother with this model or not (or stick to NoobAI). My own answer is no, but you can try ;D
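To give a rough idea of why 256 dimensions is "VERY heavy", here is a small back-of-the-envelope calculation. It assumes a single square 1280x1280 linear layer as an illustrative example (the helper names are mine, and the real model mixes many layer shapes), and counts the parameters of the two low-rank matrices against the full weight matrix.

```python
def lora_params(in_features, out_features, rank):
    """Parameters in a LoRA pair: down (rank x in) plus up (out x rank)."""
    return rank * (in_features + out_features)


def full_params(in_features, out_features):
    """Parameters in the full weight matrix of the same layer."""
    return in_features * out_features


# Illustrative layer size only; not a claim about every layer in the model.
IN_F, OUT_F = 1280, 1280

for rank in (16, 64, 256):
    ratio = lora_params(IN_F, OUT_F, rank) / full_params(IN_F, OUT_F)
    print(f"rank {rank}: {ratio:.1%} of the full {OUT_F}x{IN_F} matrix")
```

Under that assumption, a rank-256 pair stores 655,360 values against 1,638,400 for the full matrix, so about 40% of the layer, which is a long way from the small LoRAs people usually stack.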

How it was built

I used the basic kohya_ss 'extract LoRA from models' script, but to get all the way to the result without killing my machine, I had to rent a more powerful GPU instance than my usual one (with 24 GB VRAM + 48 GB RAM, it failed).

python extract_lora_from_models.py --sdxl --device cuda --save_precision bf16 --model_org illustriousXL_v01.safetensors --model_tuned Illustrious-XL-v1.0.safetensors --save_to Il1-patch.safetensors --dim 256

loading original SDXL model : illustriousXL_v01.safetensors

building U-Net

loading U-Net from checkpoint

U-Net: <All keys matched successfully>

building text encoders

loading text encoders from checkpoint

text encoder 1: <All keys matched successfully>

text encoder 2: <All keys matched successfully>

building VAE

loading VAE from checkpoint

VAE: <All keys matched successfully>

loading original SDXL model : Illustrious-XL-v1.0.safetensors

building U-Net

loading U-Net from checkpoint

U-Net: <All keys matched successfully>

building text encoders

loading text encoders from checkpoint

text encoder 1: <All keys matched successfully>

text encoder 2: <All keys matched successfully>

building VAE

loading VAE from checkpoint

VAE: <All keys matched successfully>

create LoRA network. base dim (rank): 256, alpha: 256

neuron dropout: p=None, rank dropout: p=None, module dropout: p=None

create LoRA for Text Encoder 1:

create LoRA for Text Encoder 2:

create LoRA for Text Encoder: 264 modules.

create LoRA for U-Net: 722 modules.

create LoRA network. base dim (rank): 256, alpha: 256

neuron dropout: p=None, rank dropout: p=None, module dropout: p=None

create LoRA for Text Encoder 1:

create LoRA for Text Encoder 2:

create LoRA for Text Encoder: 264 modules.

create LoRA for U-Net: 722 modules.

Text encoder is different. 0.01971435546875 > 0.01

calculating by svd

...

create LoRA network from weights

create LoRA for Text Encoder 1:

create LoRA for Text Encoder 2:

create LoRA for Text Encoder: 264 modules.

create LoRA for U-Net: 722 modules.

enable LoRA for text encoder: 264 modules

enable LoRA for U-Net: 722 modules

Loading extracted LoRA weights: <All keys matched successfully>

LoRA weights are saved to: Il1-patch.safetensors

Description

256-dimension LoRA with UNET+TE
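If you are curious what the "calculating by svd" step boils down to, here is a minimal sketch of the idea on a single toy matrix, assuming NumPy is installed. It is not the kohya_ss code: the function name `extract_lora` and the random matrices are invented for the example, and the real script does more (for instance handling convolution layers and writing the LoRA file format), none of which is reproduced here.

```python
import numpy as np


def extract_lora(w_org, w_tuned, rank):
    """Approximate (w_tuned - w_org) by up @ down using a truncated SVD."""
    delta = w_tuned - w_org
    u, s, vh = np.linalg.svd(delta, full_matrices=False)
    rank = min(rank, s.shape[0])
    up = u[:, :rank] * s[:rank]   # (out, rank), singular values folded into "up"
    down = vh[:rank, :]           # (rank, in)
    return up, down


# Toy weights: the "tuned" matrix is the original plus a rank-4 change and a bit of noise.
rng = np.random.default_rng(0)
w_org = rng.normal(size=(64, 48))
change = rng.normal(size=(64, 4)) @ rng.normal(size=(4, 48))
w_tuned = w_org + change + 1e-3 * rng.normal(size=(64, 48))

errors = {}
for rank in (1, 4, 16):
    up, down = extract_lora(w_org, w_tuned, rank)
    delta = w_tuned - w_org
    # Relative error of the low-rank reconstruction of the difference.
    errors[rank] = np.linalg.norm(delta - up @ down) / np.linalg.norm(delta)
    print(f"rank {rank}: relative error {errors[rank]:.4f}")
```

The relative error shrinks as the rank grows, which is the trade-off behind picking 256 here: a bigger file, but a closer match to the real V1.0-V0.1 difference. It also shows why the LoRA cannot give the full effect of V1.0, since whatever falls outside the kept rank is simply dropped.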